Building an MRI Scanner 60 Times Cheaper, Small Enough to Fit in an Ambulance

©Robert Wright/LDV Vision Summit

Matthew Rosen is a Harvard professor. He and his colleagues at the MGH/A.A. Martinos Center for Biomedical Imaging in Boston are working on applications of advanced biomedical imaging technologies. At the LDV Vision Summit 2017, he spoke about how he is hacking a new kind of MRI scanner that’s fast, small, and cheap.

It's really a pleasure to talk about some of the work we've been doing in my laboratory to revolutionize MRI, not by building more expensive machines with higher and higher magnetic fields, but by going in the other direction. By turning the magnetic field down and reducing the cost, we hope to make medical devices that are inexpensive enough to become ubiquitous.

MRI is the undisputed champion of diagnostic radiology. But the scanners are very expensive, massive machines that are really confined to the hospital radiology suite. That's due, in large measure, to the fact that they operate at very high, tesla-strength magnetic fields. If you imagine taking an MRI scanner and putting it in an environment like a military field hospital, where there may be magnetic shrapnel around, you could really injure someone or worse.

Our approach is to go all the way down to the other end of the spectrum, to around 6.5 millitesla, roughly 500 times lower magnetic field than a clinical scanner. I'll talk about work we've done in a homemade scanner that's based around a high-performance electromagnet, with high-performance linear gradients for spatial encoding. You can't really just turn down the magnetic field of an MRI scanner and expect to make high-quality images. This really comes down to the way we make measurements in MRI.

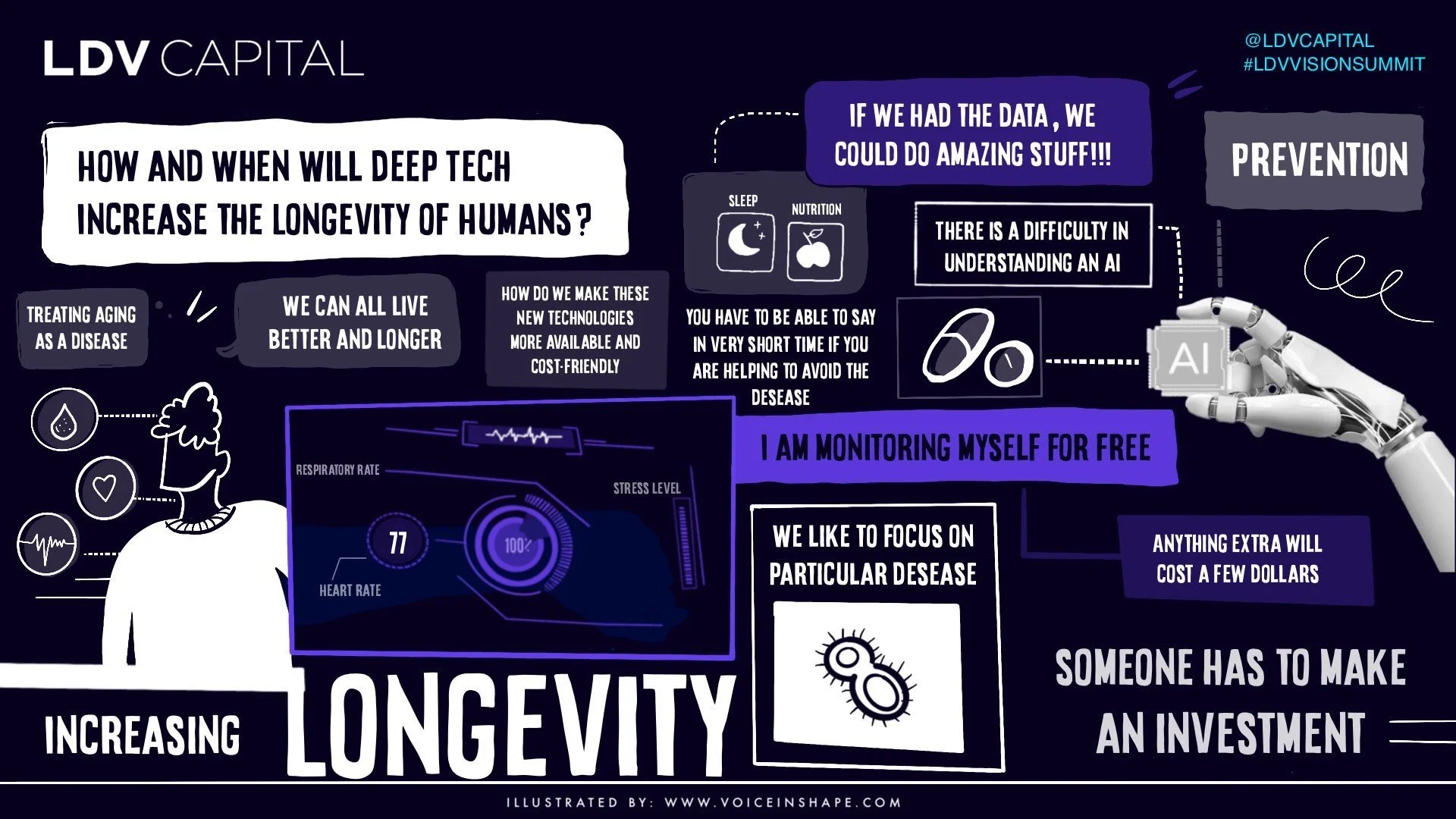

We use inductive detection. This is something you're all familiar with from childhood, where you take a magnet and move it through a loop of wire, and you generate a voltage. In this case, the moving magnet actually comes from the nuclear polarization of the water protons, typically in your body, and, in fact, what Richard Ernst calls "the powers of evil" has to do with the fact that nuclear magnetic moments are very, very small.

If you're interested in making images of this quality, over the span of a few seconds or minutes, it means you need to make this multiplicative term B, the magnetic field, very, very high. That means that all clinical scanners operate in the tesla range, typically around 3 tesla. Knowing that, what sort of images do you think we'd be able to make at our field strength, a roughly 500 times lower magnetic field, which works out to a calculated SNR around 10,000 times lower? Well, you'd probably guess that we couldn't make very good images, and, in fact, you'd be right.
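As a rough check on those two figures, here is a minimal sketch. The talk does not say which scaling law lies behind the calculated SNR, so the exponent of 3/2 is an assumption, chosen because it reproduces the quoted number. The function name is illustrative.

```python
def snr_penalty(b_high_tesla, b_low_tesla, exponent=1.5):
    """Ratio of SNR at two field strengths, ASSUMING SNR scales as B**exponent.

    The exponent of 1.5 is an assumption for illustration; the talk only
    quotes the resulting factor of about 10,000.
    """
    return (b_high_tesla / b_low_tesla) ** exponent

clinical_field_tesla = 3.0       # typical clinical scanner, from the talk
low_field_tesla = 6.5e-3         # 6.5 millitesla, from the talk

field_ratio = clinical_field_tesla / low_field_tesla
penalty = snr_penalty(clinical_field_tesla, low_field_tesla)
print(f"field ratio: about {field_ratio:.0f}x, SNR penalty: about {penalty:.0f}x")
```

Under that assumption the field ratio comes out a little under 500 and the SNR penalty close to 10,000, in line with the numbers quoted above.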

Up until a few years ago, these were the kind of images we were making in our scanner. This is, in fact, a human head, if you can believe it. It's a single slice, took about an hour to acquire, and nobody was very interested in this at all.

If this is all we had, I wouldn't be here today, so let me tell you how we solved these problems.

Really, how do you solve a hard problem? What we've been working on is a suite of technology, half of it based in physics, half of it based in the availability of inexpensive compute. The physics applications are really about improving the signal strength, or the signal-to-noise, coming out of the body and into our detectors, and then the compute side is really about reducing the noise or getting more information from the data we have, or fixing it in post, as some people in this audience might call it.

Let's start really at the beginning, our acquisition strategy. The way you do NMR, or at least the way we do NMR at, remember, very, very low magnetic fields with very, very low signals, is we take our magnetic field. We turn it on. In red is that very small nuclear polarization I talked about. We apply a resonant radiofrequency pulse. We tip the magnetization into the transverse plane, and then we apply a series of coherent radiofrequency pulses to drive that magnetization back and forth very, very rapidly.

Then, again by analogy with this inductive detection approach, we detect our signal, not using a giant hand and a moving magnet, but instead using a 3D printed coil, in this case around the head of my former colleague, Chris LaPierre, to detect this very, very small signal at a very high data rate. We call this Balanced Steady-state Free Precession. That's a bunch of words. What it really means is that we now have an approach to very rapidly sample this signal, albeit a very, very small one, coming from the head.

What this has allowed us to do is to make images like this. In six minutes, we can make a full 3D dataset, roughly 2.5 millimeter in-plane resolution, 15 slices. Just remember, this is the same machine, okay? The difference between these images has to do with the way we interrogate the nuclear spins, the fundamental property of the body, of water in this case, and the way we sample it. That's pretty nice. Having a high data rate actually allows us to now build up even higher quality images by averaging, and those are some images shown here, but there are other approaches, and this is where we start really talking about compute.
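Why a high data rate helps can be sketched in a few lines: averaging N independent noisy readings shrinks the noise by about the square root of N. This is a generic illustration with simulated Gaussian noise, not the scanner's actual data or noise model; the function name and parameters are assumptions.

```python
import random
import statistics

def noise_after_averaging(n_averages, n_trials=2000, sigma=1.0, seed=0):
    """Empirical noise level left after averaging n_averages readings.

    Each reading is pure Gaussian noise (zero signal), an assumed noise model.
    """
    rng = random.Random(seed)
    means = []
    for _ in range(n_trials):
        readings = [rng.gauss(0.0, sigma) for _ in range(n_averages)]
        means.append(sum(readings) / n_averages)
    return statistics.pstdev(means)

single = noise_after_averaging(1)
averaged = noise_after_averaging(16)
improvement = single / averaged
print(f"noise reduction from 16 averages: about {improvement:.1f}x")
```

Sixteen averages give roughly a fourfold noise reduction. That is why a faster acquisition matters: more averages fit in the same scan time.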

Pattern matching is an interesting approach people are very familiar with in the machine learning world, but we all know about this from basic physics. As an example, think of curve fitting, which you could think of as pattern matching. Curve fitting, you have some noisy data, shown as open circles here. You have some model for the way that data depends on some property, say time, so you take your functional form. You fit that function to the data, and you extract not only the magnitude of the effect but also additional information, in this case, a time constant of some NMR CPMG data.
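The curve-fitting idea can be sketched as follows, assuming a single-exponential decay model and simulated echo amplitudes. The data, noise level, and function name are made up for illustration. The fit is a straight line through the logarithm of the data, a simple stand-in for the nonlinear fit one would normally use on noisier measurements.

```python
import math
import random

def fit_exponential_decay(times, signal):
    """Fit signal = amplitude * exp(-t / time_constant).

    Uses a least-squares line through log(signal), so every sample
    must be positive.
    """
    logs = [math.log(s) for s in signal]
    n = len(times)
    mean_t = sum(times) / n
    mean_y = sum(logs) / n
    covariance = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, logs))
    variance = sum((t - mean_t) ** 2 for t in times)
    slope = covariance / variance
    intercept = mean_y - slope * mean_t
    return math.exp(intercept), -1.0 / slope

# Simulated echo train: hypothetical values, not measured data.
rng = random.Random(1)
true_amplitude, true_t2_ms = 1.0, 80.0
echo_times_ms = [10.0 * k for k in range(1, 16)]
noisy_echoes = [
    true_amplitude * math.exp(-t / true_t2_ms) + rng.gauss(0.0, 0.005)
    for t in echo_times_ms
]

amplitude, t2_ms = fit_exponential_decay(echo_times_ms, noisy_echoes)
print(f"fitted amplitude {amplitude:.2f}, fitted time constant {t2_ms:.0f} ms")
```

The fit returns both the magnitude of the effect (the amplitude) and the extra piece of information (the time constant), which is the point made above.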

The MRI equivalent of pattern matching is known as magnetic resonance fingerprinting. In contrast to what we did above, where we add up all of these very noisy images to make a higher quality image, in this case we actually don't average. We just acquire the raw data. You see the data coming into the lower left. These are very, very noisy, highly under-sampled images that normally you would sum together. The interesting thing we do here is we sort of dither the acquisition parameters a little bit. In the upper left, we show exactly how much we tip the magnetization, and in the upper right, we vary the time in between individual acquisitions a little bit.

What do we do with this data? Well, here is one of those images. I'll plot the time dependence of the signal. We call that the fingerprint. Why do we call it a fingerprint? Well, very much analogous with the partial fingerprint, smudged fingerprint, you might find at a crime scene, there are lots of ridges and valleys and things that distinguish that information or that fingerprint. If you were trying to identify who this fingerprint belonged to, you would search your database, and then you would find, hopefully, a match, which gives you not only the complete fingerprint, which is interesting, but actually it's tied to a record, in this case, my collaborator, Chris Farrar.

What we do in this case, for the MRI equivalent, is we take our MRI fingerprint. We search a database, in this case, of precomputed NMR trajectories, which is the physics that defines how the magnetization evolves as a function of time. We find our best match in red. That tells us not only the intensity, M0, of the signal at that particular pixel, but also other parameters, which in this case tell you about the local magnetic environment, both of the machine and of the body.
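The database search can be sketched as a dictionary lookup by normalized correlation. Everything below is a toy under stated assumptions: the signal model is a simple spin-echo-style expression standing in for a real precomputed NMR trajectory (it ignores spin history), and the parameter grid, the dithered schedule, the noise level, and all names are invented for illustration.

```python
import math
import random

def toy_trajectory(t1_ms, t2_ms, schedule):
    """Toy signal evolution, one sample per dithered (TR, TE) pair.

    ASSUMED stand-in for a physics simulation of the magnetization.
    """
    return [(1.0 - math.exp(-tr / t1_ms)) * math.exp(-te / t2_ms)
            for tr, te in schedule]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def match_fingerprint(fingerprint, dictionary):
    """Return (parameters, m0) for the best-correlated dictionary entry."""
    fingerprint_norm = math.sqrt(dot(fingerprint, fingerprint))
    best_params, best_score, best_m0 = None, -1.0, 0.0
    for params, entry in dictionary.items():
        entry_norm_sq = dot(entry, entry)
        overlap = dot(fingerprint, entry)
        score = abs(overlap) / (fingerprint_norm * math.sqrt(entry_norm_sq))
        if score > best_score:
            # M0 is the scale factor that best maps the entry onto the data.
            best_params, best_score, best_m0 = params, score, overlap / entry_norm_sq
    return best_params, best_m0

rng = random.Random(2)
# Dithered acquisition schedule: pseudo-random repetition and echo times (ms).
schedule = [(rng.uniform(200.0, 3000.0), rng.uniform(10.0, 200.0))
            for _ in range(500)]
t1_grid_ms = [300.0, 600.0, 1200.0, 2400.0]
t2_grid_ms = [40.0, 80.0, 160.0]
dictionary = {(t1, t2): toy_trajectory(t1, t2, schedule)
              for t1 in t1_grid_ms for t2 in t2_grid_ms}

# Simulated noisy fingerprint for one pixel (hypothetical tissue values).
true_t1, true_t2, true_m0 = 1200.0, 80.0, 2.5
clean = toy_trajectory(true_t1, true_t2, schedule)
noisy = [true_m0 * s + rng.gauss(0.0, 0.05) for s in clean]

(matched_t1, matched_t2), m0_estimate = match_fingerprint(noisy, dictionary)
print(matched_t1, matched_t2, round(m0_estimate, 2))
```

The best match returns the tissue parameters attached to that dictionary entry, and the scale factor between the entry and the measurement gives M0. A real dictionary would be far denser and built from a full simulation of the pulse sequence.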

What does this compute-based pattern matching approach do for our data at low field? Well, in addition to giving us images, like on the first line, which are very similar to the last images I showed you, we get all of this additional information for free. In this case, it's quantitative information, again about the local magnetic environment of the tissue, so-called T1 and T2, as well as properties of the instrument and the local magnetic fields. Okay, so really compute with our noisy data--ah, thank you--compute with our noisy data actually allows us to have more information than we would get with a standard approach.

The last thing I want to talk about, really, is something that my collaborator talked about early on, which is a new thing that we've only talked about publicly for about a month. It's the question: is there something to be learned from natural vision?

It comes down to a very interesting point, which is that the brain is really, really good at taking noisy data, especially in low light, and doing pattern matching on textures and edges, and at low field, we generate noisy data all day long. So can we take that low SNR data and process it in a framework that's based around the way the retina handles data, through the neuronal currents, into the reconstruction of a final image through perceptual learning, which is a data-driven, lifelong approach? Can we analogously build a way of handling the voltages coming out of our NMR coil and the actual data to reconstruct images, using a similar data-driven training approach?

We call that AUTOMAP, which is automated transform by manifold approximation. It's broader than MRI, but I'll talk about it specifically in this case. It allows us to recast image reconstruction as a supervised learning task. In this case, we train up a joint manifold. One manifold consists of the data, the voltages coming in from the scanner itself, and then the other manifold is the image representation of that.

We've built it up as a deep neural network. The reason to do this is that we can take that matrix of sensor data, those voltages coming in again from that inductively ... We're talking macroscopic things here, right? A coil wrapped around the head of a person in a magnetic field. Put that data in on the left side of this. Out comes a reconstructed image, and the reason it does a good job, as I'll show you, is because it not only subsumes the mathematical transform between the sensor data and its final data, but it also takes advantage of properties of natural images, such as image sparsity.
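The idea of recasting reconstruction as supervised learning can be sketched with a deliberately tiny stand-in. AUTOMAP itself is a deep neural network; the code below is only a linear model trained by gradient descent. The forward encoding (a 4-point discrete Fourier transform), the random training images, and every name are assumptions for illustration. What it shows is that the inverse transform is never written down; it is learned from (sensor data, image) pairs.

```python
import cmath
import random

N = 4  # samples per toy 1D "image"

def encode(image):
    """Assumed forward model: DFT, returned as real and imaginary parts."""
    features = []
    for k in range(N):
        coeff = sum(x * cmath.exp(-2j * cmath.pi * k * n / N)
                    for n, x in enumerate(image))
        features.extend([coeff.real, coeff.imag])
    return features

def decode(weights, sensor):
    """Apply the learned linear map to one vector of sensor data."""
    return [sum(w * s for w, s in zip(row, sensor)) for row in weights]

def train_linear_decoder(pairs, steps=100, learning_rate=0.1):
    """Batch gradient descent on the mean squared reconstruction error."""
    n_in, n_out = len(pairs[0][0]), len(pairs[0][1])
    weights = [[0.0] * n_in for _ in range(n_out)]
    for _ in range(steps):
        grads = [[0.0] * n_in for _ in range(n_out)]
        for sensor, image in pairs:
            predicted = decode(weights, sensor)
            for i in range(n_out):
                error = predicted[i] - image[i]
                for j in range(n_in):
                    grads[i][j] += error * sensor[j]
        for i in range(n_out):
            for j in range(n_in):
                weights[i][j] -= learning_rate * grads[i][j] / len(pairs)
    return weights

rng = random.Random(3)
training_images = [[rng.gauss(0.0, 1.0) for _ in range(N)] for _ in range(200)]
training_pairs = [(encode(img), img) for img in training_images]
weights = train_linear_decoder(training_pairs)

# Reconstruct an image the model never saw during training.
test_image = [0.5, -1.0, 2.0, 0.25]
reconstruction = decode(weights, encode(test_image))
print([round(v, 3) for v in reconstruction])
```

Swapping `encode` for a different sampling scheme would require no change to the training code, which is the sense in which a learned reconstruction is agnostic to the encoding. A linear model cannot learn image priors such as sparsity; that part depends on the deep network described above.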

Here are, very quickly, some examples of this. This is radially sampled MRI data, an SNR of around 100, and the conventional reconstruction, which is a complicated iterative reconstruction, looks like this. The same data, fed into AUTOMAP, reconstructs like this. I'm not just cherry-picking. It doesn't matter what acquisition strategy you use here. In all cases, you get superior immunity to noise, using this neural network-based approach to reconstruct these raw voltages into images.

The interesting thing about this, like all supervised learning approaches, is that it can learn any encoding. That makes it relevant beyond MRI, but also in MRI because there is a whole zoo of acquisition strategies that people use. This really reminds me of the Google DeepMind Atari Breakout program, right? That's interesting if you've seen it before. A neural network was taught to play Breakout, which is interesting enough, but actually, if you watch this for a while, you'll see that the neural network pretty quickly learned a really good acquisition strategy for playing the game, where it runs the ball up one side and uses the back wall to maximize its points.

Think about that for a minute. Are there optimal ways of sampling this data that we just haven't thought of? You can see, actually, all these encodings shown on the left side are geometric, radial, spiral, Cartesian. That's because we're logical people, and we think about things in terms of geometry, right? But if you let all the parameters run, you can imagine doing much, much better.

In conclusion, I've shown that MRI is possible outside the scanner suite, through a combination of physics and computing, both sensors and sequences, as well as these fingerprinting approaches and AUTOMAP. Now, what are the implications for health care?

Well, fortunately, as you can see from the scanner in the upper right, we are not limited to the existing footprint of our test system. The physics and the compute are basically length invariant. They scale. You can build a smaller scanner that takes advantage of a lot of the innovations we've developed. It's really built around the idea of using inexpensive hardware with scalable, mostly GPU-based compute.

The question really is what is the clinical implication of time and resolution, because there's a trade-off between them. Our images will never be as good as a 3 tesla scanner. That's just physics, okay? But every day, clinicians make a decision between speed, specificity, resolution, and cost in medical imaging and in health care. A really good example of a highly optimized version of that is the stethoscope, right? That's a $50 object. Its resolution is like this if you even want to think of it as having a resolution, but in the hands of a clinician, it can tell if someone has pneumonia or cardiac arrhythmia.

Imagine if you could use the MRI scanner as a ubiquitous tool, say that's in a CVS Minute Clinic, military field hospital, sports arena, neuro ICU, chronic care conditions, or at home, monitoring, say, long-term effects of chemotherapy. As long as the cost becomes low enough, and this metric of time versus resolution is positive, net positive, I think it's a really useful tool. This really reminds me of, of course, everyone's favorite scene from Wall Street, right?

This is the first time pretty much anyone saw a cellphone, and that was sort of neat, but the cellphone, of course--and this audience knows this very clearly--the cellphone has become useful because it's ubiquitous. Everyone has one, and that's led to new ways of connecting between people.

Imagine what you can do, just adding layers of data mining and health care and telemedicine on top of the idea of these ubiquitous sensors. With that, I want to acknowledge my group members, both past and present, and, of course, our funding agencies, and you guys for listening. Thanks so much.