The Power And Promise Of Emotion Aware Machines

This keynote is an excerpt from our LDV Vision Book 2015.

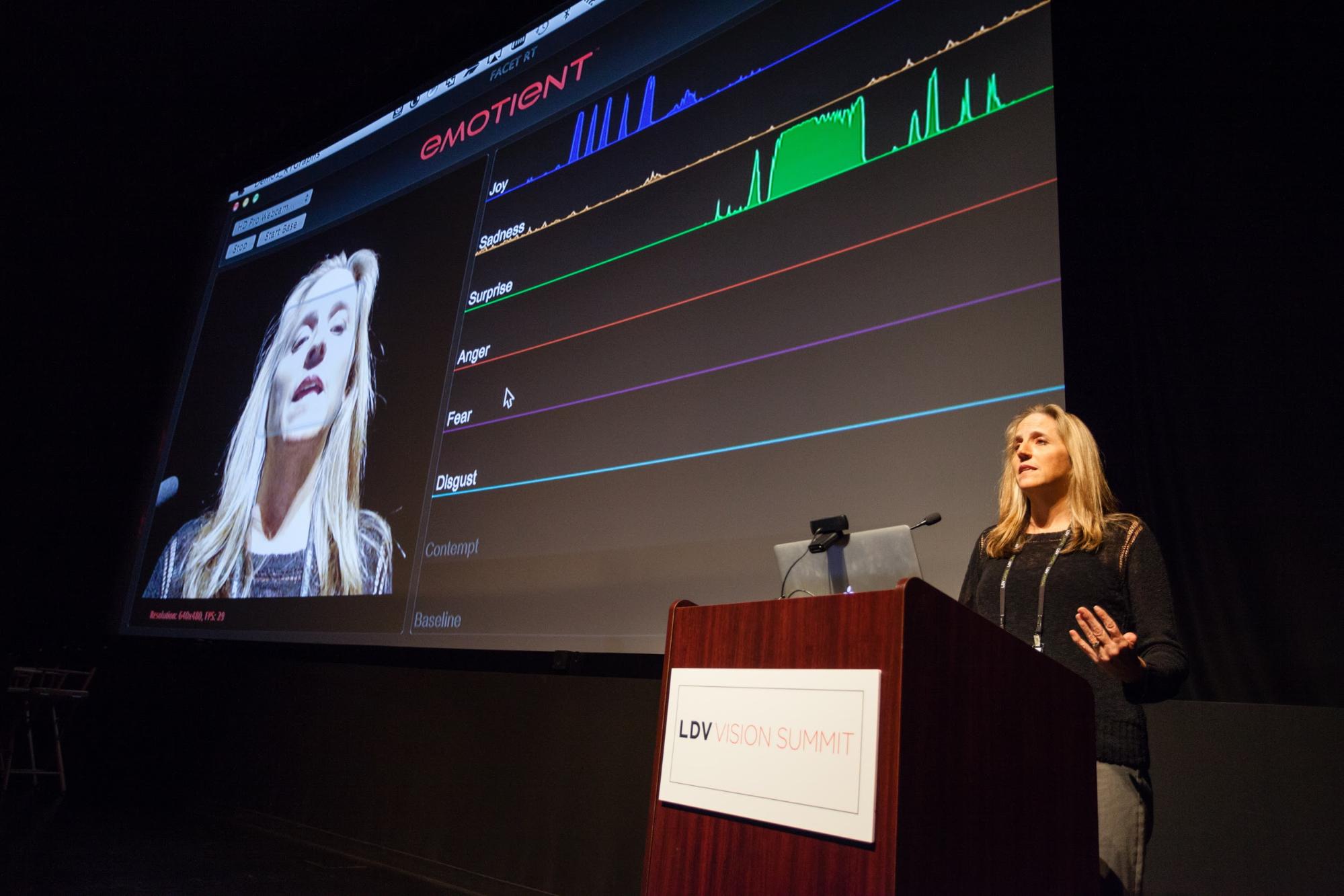

Marian Stewart Bartlett, Co-Founder & Lead Scientist, Emotient.

Apple acquired Emotient in January 2016 after she spoke at our 2015 LDV Vision Summit.

Technology that can measure emotion from the face will have broad impact across a range of industries. In my presentation today, I will show what is possible with this technology now and indicate where the field may be going. But first, a brief demo of facial expression recognition.

You can see the system detecting my face, and when I smile, the face box turns blue to indicate joy. On the right, we see the outputs over time, covering roughly the past 30 seconds. Next, I will show sadness.

That was a pronounced expression of sadness. Here is a subtle one. Natural facial behavior is sometimes subtle and sometimes not. It can also be fast: micro expressions are expressions that flash on and off the face very quickly, sometimes within a single frame of video.
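To make "fast" concrete, here is a minimal sketch of how brief expressions could be flagged in a per-frame intensity signal, such as the joy or sadness output plotted in the demo. The talk does not describe how this is done; the function name, the 0.5 evidence threshold, and the half-second upper bound are hypothetical choices for illustration only.

```python
from typing import List, Tuple


def find_brief_expressions(
    intensity: List[float],
    fps: float,
    threshold: float = 0.5,       # hypothetical evidence threshold
    max_duration_s: float = 0.5,  # hypothetical upper bound for a "brief" expression
) -> List[Tuple[int, int]]:
    """Return (start, end) frame ranges, end exclusive, in which the per-frame
    intensity stays above `threshold` for at most `max_duration_s` seconds."""
    max_frames = max(1, round(max_duration_s * fps))
    events: List[Tuple[int, int]] = []
    start = None
    for i, value in enumerate(intensity):
        if value > threshold and start is None:
            start = i  # an episode begins
        elif value <= threshold and start is not None:
            if i - start <= max_frames:  # short enough to count as brief
                events.append((start, i))
            start = None
    # An episode still open at the end of the clip is skipped because its
    # full duration is unknown.
    return events


# A one-frame flash at frame 5, then a 20-frame (about 0.67 s at 30 fps) expression.
signal = [0.0] * 5 + [0.9] + [0.0] * 5 + [0.8] * 20 + [0.0] * 3
print(find_brief_expressions(signal, fps=30.0))  # [(5, 6)]
```

At 30 frames per second a single-frame flash lasts about 33 ms, which is why per-frame analysis matters: a sampler that skipped frames could miss it entirely.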

Now some fast expressions: fast joy, then fast surprise. Anger. Fear. Disgust. Disgust is an important emotion because when people dislike something, they often contract this muscle here, the levator muscle, without realizing they are doing it. Like this. And then there is contempt, which signals being unimpressed.

One thing that is possible today is pattern recognition on the time courses of these signals. With a strong pattern recognition algorithm that captures some of the temporal dynamics, we can sometimes detect states better than human judges can. For example, we have demonstrated that we can distinguish faked pain from real pain more accurately than human judges. We can also detect depression and student engagement, and my colleagues have demonstrated that we can predict economic decisions. I will tell you a little more about that decision study.
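Capturing temporal dynamics means turning the frame-by-frame output of an expression channel into features that describe how quickly it changes. The talk does not say how this was done. As one hedged illustration, the sketch below builds a crude filter bank by subtracting progressively wider moving averages, so each feature measures how much the signal fluctuates at a given time scale. The function names and the window lengths (in frames) are assumptions; odd window lengths keep the average centered.

```python
from typing import Dict, List


def moving_average(x: List[float], window: int) -> List[float]:
    """Centered moving average; the window is truncated at the clip edges."""
    half = window // 2
    out = []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out


def temporal_band_energy(x: List[float], windows: List[int]) -> Dict[str, float]:
    """Mean absolute response in each temporal band of an expression time course.

    Subtracting a wider moving average from a narrower one keeps fluctuations
    whose time scale falls roughly between the two window lengths (in frames),
    giving a simple difference-of-smoothings filter bank."""
    levels = [x] + [moving_average(x, w) for w in windows]  # level 0 is unsmoothed
    edges = [1] + list(windows)
    features = {}
    for k in range(len(windows)):
        band = [fine - coarse for fine, coarse in zip(levels[k], levels[k + 1])]
        features[f"band_{edges[k]}-{edges[k + 1]}"] = sum(abs(v) for v in band) / len(band)
    return features


# A signal flickering every frame puts most of its energy in the fastest band.
flicker = [float(i % 2) for i in range(40)]
print(temporal_band_energy(flicker, windows=[3, 9, 27]))
```

Features like these, computed per emotion channel, are the kind of input a downstream classifier could use; a constant signal yields zero in every band.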

Facial expressions provide a window into our decisions. The reason is that the emotional parts of our brain, particularly the amygdala, which is part of the limbic system, play a huge role in decision making. The amygdala is responsible for the fast gut response, the fast value assessment that drives many of the decisions we end up making. One of the other co-founders of Emotient, Ian Fasel, collaborated with one of the leaders in neuroeconomics, Alan Sanfey, to ask whether they could predict decisions in economic games from facial expressions.

©Robert Wright/LDV Vision Summit

They also compared the machine learning system with human judges watching the same videos. The human judges performed at chance: they could not tell who was going to reject the offer. The machine learning system, however, predicted decisions in this game above chance, with 73% accuracy.

They could also identify which signals contributed to detecting a rejection. Facial signals of disgust were associated with bad offers, but they did not necessarily predict rejection; what predicted rejection was facial expressions of anger. Second, they asked which temporal frequencies contained the most information for this discrimination, and found that the discriminative signals were fast facial expressions: facial movements on the order of half a second per cycle.

Humans, on the other hand, were basing their judgments on much longer time scales. To find this out, they trained a second GentleBoost classifier, this time to predict the observers' guesses, and then looked at which features it selected. The observers' guesses were driven by facial signals on time scales that were too long to capture the discriminative information.
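The study trains boosting classifiers and then inspects which features they select. The talk gives no implementation details. Assuming the classifier is GentleBoost (Gentle AdaBoost, a boosting variant whose weak learners are weighted least-squares regression stumps), a minimal pure-Python sketch is below. The toy data, feature columns, and function names are hypothetical; in the study's setting each column might be, for example, one emotion channel in one temporal band.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

# (feature index, threshold, output if feature > threshold, output otherwise)
Stump = Tuple[int, float, float, float]


def apply_stump(stump: Stump, x: Sequence[float]) -> float:
    j, theta, above, below = stump
    return above if x[j] > theta else below


def weighted_mean(values, weights) -> float:
    total = sum(weights)
    return sum(w * v for w, v in zip(weights, values)) / total if total > 0 else 0.0


def fit_stump(X, y, w) -> Stump:
    """Weighted least-squares regression stump, the weak learner GentleBoost uses."""
    best, best_err = None, math.inf
    for j in range(len(X[0])):
        for theta in sorted({row[j] for row in X}):
            up = [i for i, row in enumerate(X) if row[j] > theta]
            down = [i for i, row in enumerate(X) if row[j] <= theta]
            a = weighted_mean([y[i] for i in up], [w[i] for i in up])
            b = weighted_mean([y[i] for i in down], [w[i] for i in down])
            err = sum(w[i] * (y[i] - (a if X[i][j] > theta else b)) ** 2 for i in range(len(X)))
            if err < best_err:
                best, best_err = (j, theta, a, b), err
    return best


def gentleboost(X, y, rounds: int) -> List[Stump]:
    """Labels y must be +1/-1. Returns the stumps; their sum is the classifier score."""
    w = [1.0 / len(X)] * len(X)
    stumps = []
    for _ in range(rounds):
        stump = fit_stump(X, y, w)
        stumps.append(stump)
        # Up-weight examples the current stump gets wrong (or only weakly right).
        w = [wi * math.exp(-yi * apply_stump(stump, xi)) for wi, yi, xi in zip(w, y, X)]
        total = sum(w)
        w = [wi / total for wi in w]
    return stumps


def predict(stumps: List[Stump], x: Sequence[float]) -> int:
    return 1 if sum(apply_stump(s, x) for s in stumps) > 0 else -1


def selected_features(stumps: List[Stump]) -> Counter:
    """How often each feature index was chosen, a rough view of what the model relies on."""
    return Counter(s[0] for s in stumps)


# Toy data: column 0 is noise, column 1 separates the classes (+1 = "reject").
X = [[0.1, 0.9], [0.8, 0.7], [0.4, 0.2], [0.9, 0.1], [0.3, 0.6], [0.6, 0.3]]
y = [1, 1, -1, -1, 1, -1]
stumps = gentleboost(X, y, rounds=5)
print([predict(stumps, x) for x in X])  # [1, 1, -1, -1, 1, -1]
print(selected_features(stumps))        # Counter({1: 5})
```

Training the same function a second time with the observers' guesses as labels, and comparing the two `selected_features` counts, mirrors the comparison described in the talk. A production system would use a vectorized implementation; the brute-force threshold search here is kept for readability.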

There are many commercial applications of facial expression technology. Some of you may remember the Sony Smile Shutter. The Smile Shutter detects smiles in the camera image, and it was based on our technology back at UCSD, before we formed the company. That was probably one of the first commercial applications of facial expression technology. On the screen is one of the more prominent current applications: an ad-testing focus group. Here the system detects multiple faces at once and summarizes the results into key performance indicators: attention, engagement, and sentiment.
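The talk names three key performance indicators but does not define them. A minimal sketch of how per-face, per-frame outputs could be collapsed into such numbers is below. The `FaceSample` fields and all three formulas are hypothetical definitions chosen for illustration, not Emotient's metrics.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FaceSample:
    """One face in one frame; all fields are hypothetical detector outputs."""
    frontal: bool    # face found and roughly facing the screen
    joy: float       # positive-expression evidence, 0..1
    negative: float  # strongest negative-expression evidence, 0..1


def summarize_kpis(samples: List[FaceSample]) -> Dict[str, float]:
    """Collapse per-face, per-frame outputs into three illustrative KPIs."""
    if not samples:
        return {"attention": 0.0, "engagement": 0.0, "sentiment": 0.0}
    watching = [s for s in samples if s.frontal]
    attention = len(watching) / len(samples)
    if not watching:
        return {"attention": attention, "engagement": 0.0, "sentiment": 0.0}
    # Engagement: how expressive viewers are, regardless of valence.
    engagement = sum(max(s.joy, s.negative) for s in watching) / len(watching)
    # Sentiment: positive minus negative evidence, in [-1, 1].
    sentiment = sum(s.joy - s.negative for s in watching) / len(watching)
    return {"attention": attention, "engagement": engagement, "sentiment": sentiment}


panel = [
    FaceSample(True, 0.8, 0.0),
    FaceSample(True, 0.0, 0.4),
    FaceSample(False, 0.0, 0.0),  # looked away: counts against attention only
    FaceSample(True, 0.2, 0.2),
]
print(summarize_kpis(panel))
```

Separating attention from the other two keeps a viewer who looks away from dragging engagement or sentiment toward zero.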

Where this is moving in the future is toward facial expression recognition in the wild: recognizing sentiment in natural contexts where people are interacting with their content. Deep learning has contributed significantly to this because it provides robustness to factors such as head pose and lighting, which enables us to operate in the wild. This shows some of the improvement we got when we moved to a deep learning architecture: blue shows our robustness to pose before deep learning, and green shows the boost we got when we switched to deep learning with an equivalent data set.

Here is an example of media testing in a natural context: people watching the Super Bowl halftime show in a bar. Watch the man in green; he shows some nice facial expressions in just a moment.

Next, we have the system aimed at a hundred people at once during a basketball game. Here we are gathering crowd analytics and getting aggregate information almost instantly. It is also anonymous, because the original video can be discarded and only the facial expression data needs to be kept. Here we have a crowd responding to a sponsored moment at a basketball game.
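The anonymity point rests on keeping only expression scores, never frames. A minimal sketch of that idea is below: each frame is reduced to the joy scores of whatever faces were detected, those are averaged into a crowd timeline, and a sponsored moment is scored as the lift over the preceding baseline. The function names, the choice of joy as the signal, and the baseline definition are all assumptions for illustration.

```python
from statistics import mean
from typing import List, Sequence


def crowd_timeline(frames: Sequence[Sequence[float]]) -> List[float]:
    """Average joy over every face found in each frame.

    Only these per-face scores reach this function, so the video frames
    themselves can be discarded right after detection; frames with no
    detected faces contribute 0.0."""
    return [mean(faces) if faces else 0.0 for faces in frames]


def moment_lift(timeline: List[float], start: int, end: int) -> float:
    """Mean crowd joy during frames [start, end) minus the mean before `start`."""
    during, before = timeline[start:end], timeline[:start]
    if not during or not before:
        raise ValueError("need frames both before and during the moment")
    return mean(during) - mean(before)


# Four frames with a varying number of faces; the sponsored moment is frames 2-3.
frames = [[0.1, 0.1], [0.1, 0.3], [0.6, 0.8, 0.7], [0.9, 0.5]]
timeline = crowd_timeline(frames)
print(moment_lift(timeline, start=2, end=4))
```

Averaging per frame rather than per person means the crowd size can change from frame to frame without any need to track identities.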

There are also a number of applications of this technology in medicine. The system can detect depression, so it can be used, for example, as a screening tool during telemedicine interviews. It can also track a patient's improvement over time and quantify the response to treatment.
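Tracking improvement over time ultimately means summarizing a series of per-session scores. One simple, hedged way to do that is the least-squares slope of the score against session number, sketched below. The screening score itself and its scale are hypothetical, and the sketch assumes equally spaced sessions.

```python
from typing import Sequence


def trend_per_session(scores: Sequence[float]) -> float:
    """Ordinary least-squares slope of a score against session index.

    Assumes equally spaced sessions; a negative slope means the score is
    falling over time."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two sessions")
    x_bar = (n - 1) / 2
    y_bar = sum(scores) / n
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(scores))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den


# Hypothetical weekly screening scores (higher = more depressive signs).
print(trend_per_session([0.8, 0.7, 0.6, 0.5]))  # close to -0.1
```

A slope is less sensitive to one unusual session than comparing only the first and last visits.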

Another area where it can contribute in medicine is pain. We can measure pain from the face. Pain is well known to be under-treated in hospitals today, and in an ongoing collaboration with Rady Children's Hospital we have demonstrated that we can measure pain from the face postoperatively, right in the hospital room. This contributes both to patient comfort and to lower costs, because under-treated pain leads to longer hospital stays and higher readmission rates.
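The talk does not say how pain is scored from the face. For illustration, a widely used FACS-based measure from the pain research literature is the Prkachin and Solomon Pain Intensity (PSPI) score, which sums the intensities of the facial action units most associated with pain. This is a swapped-in published metric, not necessarily what Emotient's system computes, and the action-unit intensities are assumed to come from some upstream detector.

```python
from typing import Mapping


def pspi(au: Mapping[int, float]) -> float:
    """Prkachin and Solomon Pain Intensity from FACS action-unit intensities.

    AU4, AU6, AU7, AU9 and AU10 are intensities on a 0-5 scale; AU43 (eyes
    closed) is 0 or 1. Missing action units count as absent (0)."""
    def get(k: int) -> float:
        return au.get(k, 0.0)

    # Brow lowering + orbital tightening + levator contraction + eye closure.
    return get(4) + max(get(6), get(7)) + max(get(9), get(10)) + get(43)


# Brow lowerer 2, cheek raiser 3, lid tightener 1, upper-lip raiser 2, eyes closed.
print(pspi({4: 2, 6: 3, 7: 1, 10: 2, 43: 1}))  # 8
```

The score ranges from 0 to 16. Taking the maximum within each pair (AU6/AU7, AU9/AU10) avoids double-counting muscle actions that tend to co-occur.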

©VizWorld